Archive

Partitioned Table Spinlocks

I was recently working on development of a fact table with 24 partitions and needed to hold up to 5 billion rows of data.

The data received in the warehouse is through a file being loaded into a staging heap table. One heap table is created per file, and there are several thousand files per day.

In the process of testing the load, I came across what I think was an interesting find.

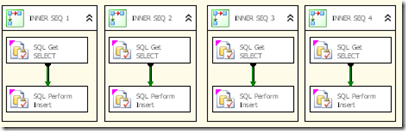

For this part we are going to adopt loading one slice into the mail fact table at a time. This way we have a very controllable method of consuming server resources along with making the process as parallel as we desire. For the initial cut, I will build an SSIS package that has 24 execute sql statements, where each one will firstly select into a variable the insert statement (this makes testing much simpler) and then each of these will flow to another 24 execute sql tasks which will run the insert.

Example of 4 threads shown here

If this is a successful method then we can easily add/change the control flow to operate off a stack of slices to load into the fact table.

On running the first test, while I expected some drop off when loading concurrently over 24 threads (one per partition) I noticed straight away that there was a large drop in loading speeds when, which was unexpected.

Load times for the run of 3.2 million rows was around 17 seconds over a number of runs, which given 1 thread running on it’s own took 1 second, we clearly had a problem. I then decided to try running the package on the server itself. Given that we are only doing two execute sql statements per thread I didn’t imagine to yield much improvement. This actually resulted in a slower execution time of 26.8 seconds.

Interestingly, the CPU usage was quite a lot higher (around 10% higher) when this was executing on the server compared with my 2 core desktop.

I then started looking into wait stats, latches and spins which showed very little until it came to the spinlocks. Below is an screenshot of latches, waits and spins

It is clear from the spinlock SOS_OBJECT_STORE that something is happening that perhaps shouldn’t be. We have a spin count of over 2 billion. Other runs of the test actually showed a much higher number of over 4 billion.

At this point I decided to perform some research on this particular type of Spinlock and found very little information. However, a blog by Thomas Kejser from SQLCAT Team (http://blog.kejser.org/2011/05/30/diagnosing-and-fixing-sos_object_store-spins-for-singleton-inserts/) Although this is related to Singleton inserts, following the diagnostic path is useful.

I thought from here that xPerf would be a good tool to give me a hint of which direction to go in, so after installing Windows Performance Toolkit on the server, I started logging in xPerf with the command

xperf -on latency -stackwalk profile

I then reran the workload and stopped xPerf with

xperf -d c:\temp\my.etl

As this was the first time of using xPerf and xPerfViewer on the server I set the symbols and symbols cache paths and started xPerfviewer.

After loading the file into xperf and loading symbols, I added the CPU by Process to the display and chose a position where I saw high and erratic CPU and brought up the summary table. After drilling into the SQL Server process I finally saw what the source of the spinlocks could be. We have very high weightings for AcquireLocksForInsert, and also for InsertRecordOnPage (expected since we are actually inserting data), and LocatePageForInsert (Could be contention here due to the locking).

The screenshot below highlights the problem functions.

In order to resolve this, I decided to review what was actually happening on the inserts, i.e. what kind of locks were being taken.

It appeared in the lock dmv’s that millions of KEY locks were being taken during the load, which certainly explains the amount of time spent in the “AcquireLocksForInsert”

To resolve this two actions were taken:

- Change the CLUSTERED INDEX to not allow row/page locks.

CREATE CLUSTERED INDEX ix_cu_Target_partitioned

ON Target_table_partitioned (surrogate_key)

WITH (ALLOW_ROW_LOCKS = OFF, ALLOW_PAGE_LOCKS = OFF)

ON [ps_hash_pos_24](partition_key)

- Alter the destination table to set the LOCK_ESCALATION=AUTO, which according to BOL will allow lock escalation to the partition level.

ALTER TABLE [dbo].[Target_table_partitioned] SET (LOCK_ESCALATION = AUTO)

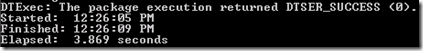

Running the test again we get much faster execution:

Also, much less time spent on “AcquireLocksForInsert”

This is also backed up by the locking during the inserts, where there are only 5 PAGE locks, which escalated to HOBT(partition) locks, 1 for each Partition

This gives us an insert speed into the fact table of around 1.2millions rows/second

Some other useful posts

Allen Mcquire SQL server spinlock reference

http://msdn.microsoft.com/en-us/library/ms184286.aspx

http://msdn.microsoft.com/en-us/library/ms190273.aspx

http://jmkehayias.blogspot.com/2008/12/troubleshooting-locking-in-database.html

http://msdn.microsoft.com/en-us/library/dd425070(v=sql.100).aspx

SQL Server TDE (Transparent Data Encryption)

A recent requirement on a project was to protect sensitive data while it was being shipped as a backup from the UK to the US.

The requirement was to ensure that if the data fell into the wrong hands that it would be unfeasible for the data to be reconstructed.

One approach for consideration was a new feature in SQL Server 2008 call transparent data encryption. To evaluate this, I decided that I would have to learn how to get it up and running and try and discover any pro’s and con’s before actually advising that we use it.

As you may have guessed from the title, TDE is transparent. That means that a user of the database should have no idea that the data on the disc is actually encrypted.

Considerations

Volume of data to be encrypted

- The larger the volume the longer it will take to do the initial encryption (this is only the case if you already have the data and need to apply encryption to it)

Table Compression

- There are some huge benefits, particularly with storage requirements to apply row/page compression to the data tables. In my instance the compressed table was 30% the size. So instead of using 16 TB of space we used 4.8 TB. The cost of this saving as you can imagine is significant!

- As encrypted data tends to be passed through an algorithm where every output is close to being unique this will cause the data to be much less compressible, so the savings you get for using row/page compression are minimal CPU

- Encrypting data requires each data item to be passed through an algorithm where the output is encrypted. This requires some CPU. I have noticed that for smaller databases this is almost immaterial Backup compression

- Similar to table compression problems. Encrypted data does not compress well

Ok, so how does it work.

So the encryption works on the basis of having an master key that is stored in the Master database. A named certificate that can be applied to a database, and an encryption algorithm,

REMEMBER TO BACKUP THE KEY

So below is a script on setting up TDE for the AdventureWorks2008R2 database

To Encrypt a database

(Insert stolen scripts from BOL here) (it did take time to get there btw) (and BOL was actually wrong in a few places)

| USE master;

GO CREATE MASTER KEY ENCRYPTION BY PASSWORD = ‘<UseStrongPasswordHere>’; CREATE CERTIFICATE MyServerCert WITH SUBJECT = ‘My DEK Certificate’; USE AdventureWorks2008R2; CREATE DATABASE ENCRYPTION KEY ALTER DATABASE AdventureWorks2008R2 USE AdventureWorks2008R2; /* The value 3 represents an encrypted state SELECT * |

To Backup the key

It is important that this happens. I believe there is a way if you know the passwords and certificate names, but I wasn’t brave enough to try it.

The key is backed up to the SQL data folder

| USE master; GO BACKUP CERTIFICATE MyServerCert |

To Remove Encryption from the server

| ALTER DATABASE AdventureWorks2008R2 SET ENCRYPTION OFF USE master; ALTER MASTER KEY DROP CERTIFICATE MyServerCert USE AdventureWorks2008R2; DROP DATABASE ENCRYPTION KEY USE master; DROP MASTER KEY; |

To complete the removal restart SQL Server service

You should also execute the following to confirm that certificate and keys are removed

| select * from master.sys.certificates

select * from sys.dm_database_encryption_keys |

It is also possible to move a TDE encrypted database. This is fairly straight forward if you managed to get this far, so pls see BOL.

Chris

In Addition to above, I have been pointed in the direction of the “SERVER MASTER KEY” by Andrew C. More info on this can be found here. It can be used to encrypt the database master key, so that everything is now encrypted.

SSAS Lock Types

As it usually quite difficult to identify what the various lock types are in Analysis Services and I always seem to forget, below are the lock types and id’s so that in the event of a deadlock, it is possible to identify what locks are present.

| Lock Type | Lock Name |

| 2 | Read – For processing |

| 4 | Write – For processing |

| 8 | CommitShared |

| 16 | CommitExclusive |

It is possible to take out a manual lock on the cube using the following syntax in an MDX window.

If you run the begin tran GO and the lock statement, this will take out a CommitShared Lock on the adventure works database. If you at this point execute the MDX

select *

from $system.discover_locks

Then you can see that there is a lock_type 8 taken as expected and a lock status of 1 meaning it has been granted.

If you now exectue a processing command for say a partition in another window it is then possible to see all of the locks that have been taken out as it will eventually try and get a lock at the server level (more info over here at Andrew Calvett’s blog).

The above screenshot (which might be difficult to see) shows that we are now waiting for a CommitExclusive lock at the database level which on the line below we can see has been granted out the lock that we took out explicitly. (You can also see the server/instance level lock in the window above also so that the master.mvp file can be updated).

You now have a choice to COMMIT TRAN or ROLLBACK TRAN which allows the processing in the other window to complete (or rollback)

Chris

There is more info on deadlocking over at John Lam’s blog which goes into detail on deadlocking in Analysis Services.

Also another reference to Andrew Calvett’s blog